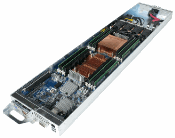

Thank you everybody for coming! I was talking to somebody earlier in the room and they had not seen an OCP server. This is a fully functional OCP server [Image on left]: it has two processors, memory, one SSD for your operating system, and a networking card.

The Open Compute Project talks about serviceability: this whole server requires only one finger to lift it. In addition to talking about Open Compute and networking today, I'm going to talk about compute and storage.

About Penguin Computing

Penguin Computing started 20 years ago on the same supposition as the LAMP stack (Linux, Apache, MySQL): disaggregating Sun, Solaris and UNIX. We've been doing Linux systems for almost 20 years, with a focus that ranges from data centers to high-performance computing.

We’re located in Fremont, have the capability to manufacture 5,000 units per month, and have deployed in over 50 countries. We started our global expansion about 3 or 4 years ago and found EPS Global through Finisar, as they have been Finisar’s Global Distributor of the Year for 4 consecutive years. We're proud to have them as one of our worldwide partners!

Facebook Server Configuration

Let's talk a little bit about Facebook hardware. They have 4 main building blocks:

- Compute

- Storage

- Network rack

- Power

That's really what constitutes Open Compute, and they vary the ratio of those components to address their 6 different application profiles: web, database, Hadoop, Haystack (which is one of their object stores for photos), news feeds, and archival storage. These are the applications that Facebook run, and each one gets a different configuration of the building blocks.

Facebook looked at which applications they needed to run, and this is what drove the design principle for doing 21” racks which they then configured at the rack level.

- Type I is compute servers only

- Type II has multiple power shelves, and is a little bit less dense

- Type III is more heavy on the storage side

- Type IV is their Object Storage

- Type V is their News Feed

- Type VI is Archival, which you can see is almost completely storage.

As you can see, Facebook are designing things at the rack level, and the rack really becomes their base component. It’s not networks and servers; those are discrete components of the overall solution.

Penguin Computing and The OCP Foundation

We joined the Open Compute Project back in 2012 (it started in 2011). It was very relevant for us, because Penguin actually conceived the idea of Linux servers, and in 2005 we happened to have a customer called “The Facebook” that we were selling regular 19” servers to. So it was pretty apropos that we became the second solution provider within the Open Compute Project.

Fig. 3 Penguin Computing's Customers

We have done quite a few Open Compute projects. OCP is here, and it’s being deployed at scale. Penguin deploys all the OCP gear in the US for Goldman Sachs, one of the OCP board members. We serve Bloomberg and Fidelity, we’re within the university space, and we work with Yahoo! Japan (who went from Supermicro 19” servers to the Winterfell architecture from Facebook) and MicroStrategy (who’s running a business analytics cloud). One of the things about Penguin is that we are fairly flexible: in the Yahoo! Japan deployment they decided to keep Brocade, but use Pica8 for out-of-band management. We did the full rack integration and were able to deploy that to their new data center in Wenatchee. We also look after the high-performance computing area within the Department of Energy labs in the US.

I was asked this question earlier today: “Why OCP, and not Cisco UCS blade architecture?” Look at the rack [Image on Left] as a blade chassis: it has integrated power and so on, so it's a fully contained system. Then you have the shelves, and then the specific nodes. You can look at the Open Rack solution as a big blade enclosure, but what makes it different is the power supplies [Image below]: rectifiers provide 12 volt DC power to the entire rack, and therefore you get a lot of redundancy and a lot of reliability through your power infrastructure.
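To give a feel for what a shared 12 V DC supply means in practice, here is a minimal Python sketch. It only applies I = P / V and a simple N+1 count; the 12 kW rack load and 3 kW rectifier rating are hypothetical numbers chosen for illustration, not figures from the talk or from any OCP specification.

```python
import math


def busbar_current_amps(rack_load_watts: float, bus_voltage: float = 12.0) -> float:
    """Current the rack busbar must carry for a given load (I = P / V)."""
    return rack_load_watts / bus_voltage


def rectifiers_needed(rack_load_watts: float, rectifier_watts: float, spares: int = 1) -> int:
    """Rectifiers required to carry the load, plus spare units for redundancy."""
    return math.ceil(rack_load_watts / rectifier_watts) + spares


if __name__ == "__main__":
    rack_load = 12_000.0   # hypothetical rack load in watts
    rectifier = 3_000.0    # hypothetical rectifier rating in watts
    print(f"Busbar current: {busbar_current_amps(rack_load):.0f} A")
    print(f"Rectifiers (N+1): {rectifiers_needed(rack_load, rectifier)}")
```

Because the rectifiers are shared by every node in the rack, one spare unit covers the whole rack rather than a single server.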

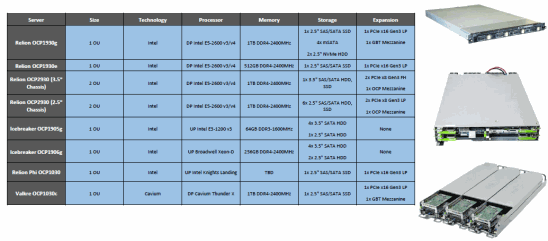

Fig. 6 - Penguin Computing's Offerings

Compute Servers Overview

Penguin provides compute servers [Fig. 6]: the traditional Winterfell 2OU, 3-node chassis, and storage servers. Because of our flexible architecture, we are also able to provide compute servers that support ARM processors, GPUs, and OpenPOWER processors, the latter through what Rackspace is doing with the Barreleye project.

These are different configurations. This one right here [Image on right] is our storage configuration for hard drives, developed for object storage solutions.

Here's our traditional rack. It’s the OCP 1930G, and it's an OCP-inspired solution. Facebook has OCP designs specifically for their own use: when you hear names like Winterfell, Knox, HatTrick, Big Sur, or Parmel, these are various Facebook server types that they are using internally. We really respect what they did with the OCP form factor, so we did an OCP-inspired solution. This is Penguin’s specific design for OCP [Image on left], and we will be contributing it back to the OCP community, so if anybody wants to do their own Tundra sled, they have the capability to do that.

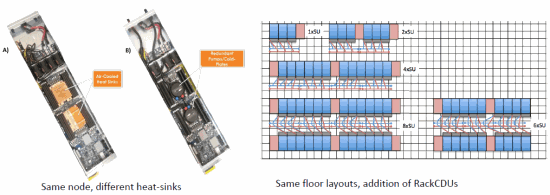

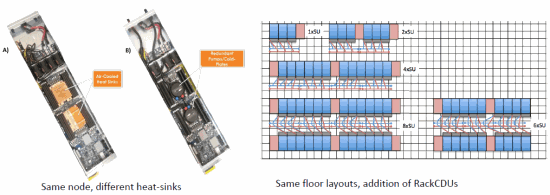

Fig. 9 - Direct-to-chip cooling

One of the interesting things, very applicable for our High-Performance Computing customers who are looking at converting existing data centers that have liquid or water cooling, is that Penguin has the ability to use direct-to-chip cooling [Fig. 9], which removes about 70-80% of the heat generated in the entire node by removing the processor heat. We work with a company based out of Denmark to provide this type of computing, which allows us to get about 81-96 nodes per rack in Open Compute deployments, so we can focus on super-dense scale architecture within our customer set.
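As a rough illustration of why that matters at 81-96 nodes per rack, the sketch below estimates how much heat is left for air cooling once the liquid loop has taken its 70-80% share. The 500 W per-node figure is an assumption made up for this example; only the percentages and the node counts come from the talk.

```python
def residual_air_heat_watts(node_watts: float, nodes_per_rack: int, liquid_fraction: float) -> float:
    """Heat per rack (in watts) that air cooling still has to remove."""
    if not 0.0 <= liquid_fraction <= 1.0:
        raise ValueError("liquid_fraction must be between 0 and 1")
    return node_watts * nodes_per_rack * (1.0 - liquid_fraction)


if __name__ == "__main__":
    node_watts = 500.0  # hypothetical per-node power draw, not from the talk
    for nodes in (81, 96):
        for fraction in (0.70, 0.80):
            left = residual_air_heat_watts(node_watts, nodes, fraction)
            print(f"{nodes} nodes, {fraction:.0%} to liquid: {left / 1000:.1f} kW left for air")
```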

We are currently on the Broadwell platform, and we also have samples of the Intel Skylake platform, so we can very easily take this specific design, work with our PCB manufacturer, and replace the Broadwell chips with Skylake chips if required. It's a simple path to modularly upgrade your data center using Intel chips.

How to Get Started with Open Compute

One of the easiest ways to get started with OCP is through the 19” AC power form factor; this is in fact what we’re mostly deploying for Goldman Sachs. If you work in a big enterprise that is hesitant about getting into OCP gear because it's something completely different, you can start slow by bringing this 19” form factor into your data center. It works just like any pizza box server that you would get from Dell or HP.

This is the Winterfell server [Image on Left]. As I mentioned, it's 2OU high (what I've been showing you here today is the 1OU-high OCP form factor). It's ideally suited for a lot of the Facebook workloads that don't need the super-dense compute provided by Tundra: they can use Winterfell for things like OpenStack, where they need a lot of drives or a lot of PCIe slots to drive their workload.

This is the Windmill Form Factor [Image on Right], which is a 4-node 2OU system, so it's very similar to a lot of the 4-node 2OU form factors you might see from your regular 19-inch infrastructure providers.

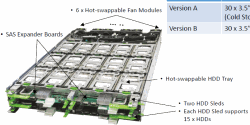

This is HatTrick [Image on Left], a 2OU, 45-drive JBOD, which is ideally suited for Hadoop, object storage, or even archival storage. You can put very large drives in there, and in cold storage it draws only 200 watts. If you're using it for object storage, it draws 680 watts in operation.
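Spreading those two chassis figures across the 45 drives gives a quick per-drive view. This is only back-of-the-envelope arithmetic on the numbers quoted above; it attributes all chassis power to the drives, which is a simplifying assumption.

```python
DRIVES_PER_CHASSIS = 45       # from the talk
COLD_STORAGE_WATTS = 200.0    # from the talk
OBJECT_STORAGE_WATTS = 680.0  # from the talk


def watts_per_drive(chassis_watts: float, drives: int = DRIVES_PER_CHASSIS) -> float:
    """Average chassis power per drive slot."""
    return chassis_watts / drives


if __name__ == "__main__":
    cold = watts_per_drive(COLD_STORAGE_WATTS)
    active = watts_per_drive(OBJECT_STORAGE_WATTS)
    ratio = COLD_STORAGE_WATTS / OBJECT_STORAGE_WATTS
    print(f"Cold storage:   {cold:.2f} W per drive")
    print(f"Object storage: {active:.2f} W per drive")
    print(f"Cold storage draws {ratio:.0%} of the object storage figure")
```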

Fig. 13 - JBOD; Fig. 14 - JBOF

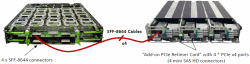

Here's more from the JBOD offering: this is the traditional Knox JBOD [Fig. 13], which is a 2OU, 30-drive system. We're also coming out with the JBOF [Fig. 14], which is “Just a Bunch Of Flash” storage, so you can get 30 x 2.5” hot-swappable NVMe drives if you're doing things like database acceleration and you want the performance provided by flash drives.

That covers our compute and storage. We're trying to provide a full Penguin-branded solution so you have one source to come to for integration needs. We also have what we call our Arctica networking gear: we've been shipping a 100G solution since last year, and we have OCP-native models that plug right into the DC busbar.

Software-Defined Networking & Penguin

Penguin’s vision is to provide flexible, scalable and economical networks for people who want to evolve into modern internet or HPC data centers. We have software-defined switches, for which we partner exclusively with Cumulus Networks.

We provide multiple operating systems, and we’ve concentrated on a few [Image on left]. Cumulus has been our primary partner over the years, SnapRoute is a new entrant in open source network operating systems, and we've been pairing with Canonical as well.

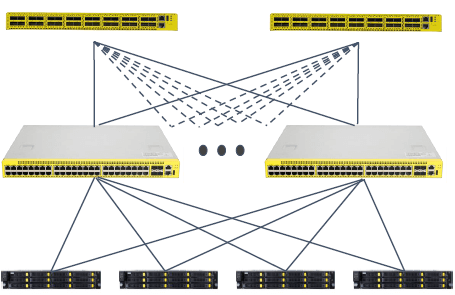

What we're seeing is that the need for 25G, 50G and 100G is here now. We are targeting companies that are looking at Cisco, Juniper or Brocade, and we’re providing the ability to migrate up to 100G at approximately 25-33% of the cost. We had a very large seismic processing center that was looking at a Cisco infrastructure with big chassis switches; Penguin was able to define a Spine / Leaf network and deliver it for about 25% of the cost. It's much more economical for them to go into an open network environment than to stay with a Tier-1 closed proprietary solution, or even a Britebox solution where it’s branded and integrated by a single vendor.

We've talked a lot about this. As I just mentioned, Spine / Leaf topologies [Image on left] are the preferred way to build your network for a scale-out infrastructure.
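For readers new to Spine / Leaf, the sizing arithmetic can be sketched in a few lines of Python. This assumes the common two-tier design in which every leaf has one uplink to every spine; the port counts below are hypothetical and do not describe any specific Penguin Arctica switch. Only the 25G and 100G link speeds are taken from the talk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeafSpineFabric:
    """Two-tier fabric where each leaf connects once to every spine."""
    downlinks_per_leaf: int  # server-facing ports on each leaf
    uplinks_per_leaf: int    # spine-facing ports on each leaf
    spine_ports: int         # ports on each spine switch
    downlink_gbps: float
    uplink_gbps: float

    @property
    def spines(self) -> int:
        # One uplink per spine, so the uplink count fixes the spine count.
        return self.uplinks_per_leaf

    @property
    def max_leaves(self) -> int:
        # Each leaf uses one port on every spine.
        return self.spine_ports

    @property
    def max_servers(self) -> int:
        return self.max_leaves * self.downlinks_per_leaf

    @property
    def oversubscription(self) -> float:
        # Server-facing bandwidth divided by spine-facing bandwidth per leaf.
        down = self.downlinks_per_leaf * self.downlink_gbps
        up = self.uplinks_per_leaf * self.uplink_gbps
        return down / up


if __name__ == "__main__":
    # Hypothetical leaf: 48 x 25G down, 6 x 100G up; hypothetical 32-port spine.
    fabric = LeafSpineFabric(48, 6, 32, 25.0, 100.0)
    print(f"Spines: {fabric.spines}, leaves: {fabric.max_leaves}")
    print(f"Servers: {fabric.max_servers}")
    print(f"Oversubscription: {fabric.oversubscription:.1f}:1")
```

Scaling out means adding leaves until the spine ports run out, and adding spines (and uplinks) lowers the oversubscription ratio, which is what makes the topology a good fit for fixed-port open networking switches.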

One of the things that is important to our customers as they approach open networking environments is: “Penguin, we like the hardware you provide, the integration capabilities and the networking, but I'm in the Czech Republic, Poland, Germany, or the UK. Can you provide support?” The answer is absolutely yes. Our idea is to provide the same level of support you would get from an HP or Dell, so Tier-1 type support.

Right now Penguin covers most industries across all regions worldwide. We have parts depots in the US, as well as in London, Singapore, Melbourne, Tokyo, Amsterdam and Frankfurt. Everything comes with a 3-year standard warranty with advanced parts replacement. Talk to EPS if you need high-density servers!